OpenVINO™ Documentation

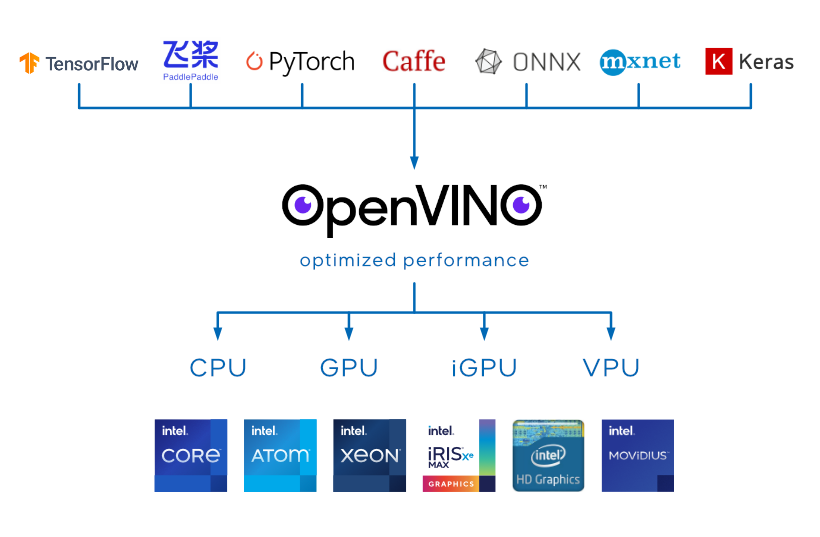

OpenVINO™ is an open-source toolkit for optimizing and deploying AI inference.

- Boost deep learning performance in computer vision, automatic speech recognition, natural language processing and other common tasks

- Use models trained with popular frameworks like TensorFlow, PyTorch and more

- Reduce resource demands and efficiently deploy on a range of Intel® platforms from edge to cloud

Check the full range of supported hardware on the Supported Devices page and see how it stacks up on our Performance Benchmarks page.

OpenVINO supports deployment on Windows, Linux, and macOS.

Train, Optimize, Deploy

* The ONNX format is also supported, but conversion to OpenVINO is recommended for better performance.

Want to know more?

Get Started

Learn how to download, install, and configure OpenVINO.

Open Model Zoo

Browse through over 200 publicly available neural networks and pick the right one for your solution.

Model Optimizer

Learn how to convert your model and optimize it for use with OpenVINO.

Tutorials

Learn how to use OpenVINO based on our training material.

Samples

Try OpenVINO using ready-made applications explaining various use cases.

DL Workbench

Learn about the alternative, web-based version of OpenVINO. Requires installing the DL Workbench container.

OpenVINO™ Runtime

Learn about OpenVINO's inference mechanism, which executes IR, ONNX, and Paddle models on target devices.

Tune & Optimize

Apply model-level (e.g. quantization) and runtime-level (i.e. application-level) optimizations to make your inference as fast as possible.

Performance Benchmarks

View performance benchmark results for various models on Intel platforms.