Optimize Preprocessing

Introduction

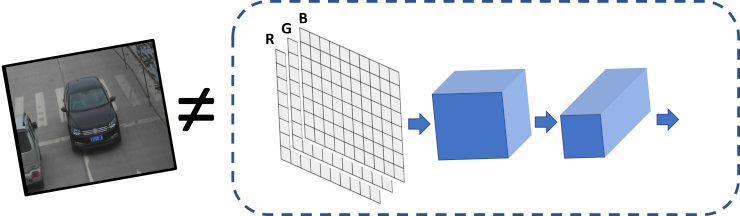

When your input data does not exactly match the neural network model’s input tensor, additional operations are needed to transform the data into the format the model expects. These operations are known as “preprocessing”.

Example

Consider the following standard example: a deep learning model expects input with the {1, 3, 224, 224} shape, FP32 precision and RGB color channel order, and it requires data normalization (subtract the mean and divide by a scale factor). But you have just a 640x480 BGR image (data is {480, 640, 3}). This means that we need operations that will:

Convert the U8 buffer to FP32

Transform to planar format: from {1, 480, 640, 3} to {1, 3, 480, 640}

Resize the image from 640x480 to 224x224

Make a BGR->RGB conversion, as the model expects RGB

For each pixel, subtract mean values and divide by the scale factor
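For comparison, the steps above can be sketched by hand in plain Python. This is only an illustration of what each step computes on a tiny 2x2 BGR image stored as nested lists; the function name `manual_preprocess` is hypothetical, resizing is left out for brevity, and OpenVINO's own implementation may differ in detail.

```python
def manual_preprocess(image_hwc_bgr, mean, scale):
    """Turn an HWC, BGR, U8 image (nested lists) into a {1, C, H, W} float RGB tensor.

    mean and scale are given in RGB order, matching what the model expects.
    Resizing is intentionally omitted to keep the sketch short.
    """
    height = len(image_hwc_bgr)
    width = len(image_hwc_bgr[0])
    channels = 3
    # Planar (CHW) output: one HxW plane per channel.
    planes = [[[0.0] * width for _ in range(height)] for _ in range(channels)]
    for y in range(height):
        for x in range(width):
            b, g, r = image_hwc_bgr[y][x]
            # BGR -> RGB conversion, then U8 -> FP32
            rgb = (float(r), float(g), float(b))
            for c in range(channels):
                # Interleaved -> planar, plus per-channel mean/scale normalization
                planes[c][y][x] = (rgb[c] - mean[c]) / scale[c]
    # Add the batch dimension: {1, C, H, W}
    return [planes]


image = [
    [[0, 0, 255], [0, 255, 0]],       # red pixel, green pixel (BGR order)
    [[255, 0, 0], [128, 128, 128]],   # blue pixel, gray pixel
]
tensor = manual_preprocess(image, mean=[100.5, 101, 101.5], scale=[50., 51., 52.])
print(len(tensor), len(tensor[0]), len(tensor[0][0]), len(tensor[0][0][0]))  # 1 3 2 2
```

Even this reduced version needs an explicit loop over every pixel on the CPU, which is the work the Preprocessing API can move into the model's execution graph.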

Even though all these steps can be implemented relatively easily in the application’s code before inference, they can also be done with the Preprocessing API. Reasons to use this API are:

The Preprocessing API is easy to use

Preprocessing steps are integrated into the execution graph and performed on the selected device (CPU/GPU/VPU/etc.) rather than always being executed on the CPU. This improves utilization of the selected device.

Preprocessing API

Intuitively, the Preprocessing API consists of the following parts:

Tensor: Declare the user’s data format, such as the shape, layout, precision and color format of the actual user data

Steps: Describe the sequence of preprocessing steps that need to be applied to the user’s data

Model: Specify the model’s data format. Usually, precision and shape are already known for the model, so only additional information, such as layout, can be specified

Note: All modifications of the model’s graph must be performed after the model is read from disk and before it is loaded on the actual device.

PrePostProcessor object

The ov::preprocess::PrePostProcessor class allows specifying preprocessing and postprocessing steps for a model read from disk.

```cpp
#include <iostream>
#include <openvino/openvino.hpp>
#include <openvino/core/preprocess/pre_post_process.hpp>

ov::Core core;
std::shared_ptr<ov::Model> model = core.read_model(model_path);
ov::preprocess::PrePostProcessor ppp(model);
```

```python
from openvino.preprocess import PrePostProcessor
from openvino.runtime import Core

core = Core()
model = core.read_model(model=xml_path)
ppp = PrePostProcessor(model)
```

Declare user’s data format

To address a particular input of the model/preprocessor, use the ov::preprocess::PrePostProcessor::input(input_name) method:

```cpp
ov::preprocess::InputInfo& input = ppp.input(input_name);
input.tensor()
    .set_element_type(ov::element::u8)
    .set_shape({1, 480, 640, 3})
    .set_layout("NHWC")
    .set_color_format(ov::preprocess::ColorFormat::BGR);
```

```python
from openvino.preprocess import ColorFormat
from openvino.runtime import Layout, Type

ppp.input(input_name).tensor() \
    .set_element_type(Type.u8) \
    .set_shape([1, 480, 640, 3]) \
    .set_layout(Layout('NHWC')) \
    .set_color_format(ColorFormat.BGR)
```

Here we’ve specified all the information about the user’s input:

Precision is U8 (unsigned 8-bit integer)

Data represents a tensor with the {1,480,640,3} shape

Layout is “NHWC”. It means that height=480, width=640, channels=3

Color format is BGR
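The layout string is what gives each dimension of the shape its meaning. A minimal sketch of that mapping, assuming one letter per dimension as in `"NHWC"`; the helper `dims_by_layout` is hypothetical and not part of the OpenVINO API, and `ov::Layout` may accept richer syntax that this sketch ignores.

```python
def dims_by_layout(shape, layout):
    """Map each layout letter (N, C, H, W) to the matching dimension of shape."""
    if len(shape) != len(layout):
        raise ValueError(f"layout {layout!r} has {len(layout)} dims, shape has {len(shape)}")
    return dict(zip(layout, shape))


user_dims = dims_by_layout([1, 480, 640, 3], "NHWC")
print(user_dims)  # {'N': 1, 'H': 480, 'W': 640, 'C': 3}
```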

Declare model’s layout

The model’s input already has information about precision and shape, and the Preprocessing API is not intended to modify it. The only thing that may be specified is the input’s data layout:

```cpp
// The model's input already knows its shape and data type, no need to specify them here
input.model().set_layout("NCHW");
```

```python
# The model's input already knows its shape and data type, no need to specify them here
ppp.input(input_name).model().set_layout(Layout('NCHW'))
```

Now, if the model’s input has the {1,3,224,224} shape, preprocessing will be able to identify that the model’s height=224, width=224 and channels=3. Height/width information is necessary for resize, and the number of channels is needed for mean/scale normalization.
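With both layouts declared, the preprocessor has enough information to derive some of the remaining work by itself. The sketch below is hypothetical helper code, not OpenVINO internals: `transpose_order` computes the permutation that turns the user’s NHWC tensor into the model’s NCHW input, and `resize_target` reads the resize target from the model shape. A dynamic dimension is represented here as `None`, which leaves the target unknown.

```python
def transpose_order(src_layout, dst_layout):
    """Position of each dst_layout dimension inside src_layout (a transpose permutation)."""
    return [src_layout.index(dim) for dim in dst_layout]


def resize_target(model_shape, model_layout):
    """Return the model's (height, width), or None if either one is dynamic."""
    dims = dict(zip(model_layout, model_shape))
    height, width = dims["H"], dims["W"]
    if height is None or width is None:
        return None  # dynamic size: resize needs an explicit target
    return height, width


print(transpose_order("NHWC", "NCHW"))               # [0, 3, 1, 2]
print(resize_target([1, 3, 224, 224], "NCHW"))       # (224, 224)
print(resize_target([None, 3, None, None], "NCHW"))  # None
```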

Preprocessing steps

Now we can define the sequence of preprocessing steps:

```cpp
input.preprocess()
    .convert_element_type(ov::element::f32)
    .convert_color(ov::preprocess::ColorFormat::RGB)
    .resize(ov::preprocess::ResizeAlgorithm::RESIZE_LINEAR)
    .mean({100.5, 101, 101.5})
    .scale({50., 51., 52.});
// Not needed, such conversion will be added implicitly
// .convert_layout("NCHW");
```

```python
from openvino.preprocess import ResizeAlgorithm

ppp.input(input_name).preprocess() \
    .convert_element_type(Type.f32) \
    .convert_color(ColorFormat.RGB) \
    .resize(ResizeAlgorithm.RESIZE_LINEAR) \
    .mean([100.5, 101, 101.5]) \
    .scale([50., 51., 52.])
# .convert_layout(Layout('NCHW'))  # Not needed, such conversion will be added implicitly
```

Here:

Convert U8 to FP32 precision

Convert the current color format (BGR) to RGB

Resize to the model’s height/width. Note that if the model accepts a dynamic size, e.g. {?, 3, ?, ?}, resize will not know how to resize the picture, so in this case you should specify the target height/width at this step. See also ov::preprocess::PreProcessSteps::resize()

Subtract the mean from each channel. At this step, the color format is already RGB, so 100.5 will be subtracted from each Red component, 101 from each Green component, and 101.5 from each Blue component.

Divide each pixel value by the appropriate scale value. In this example, each Red component will be divided by 50, Green by 51, and Blue by 52.

Note: the last convert_layout step is commented out because it is not necessary to specify the last layout conversion. PrePostProcessor will do such conversion automatically.
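Because `mean` and `scale` are applied per channel, their position relative to `convert_color` matters. The plain-Python sketch below uses a hypothetical `normalize` helper on a single pixel: it normalizes the same pixel once after the BGR->RGB conversion, as in the steps above, and once on the raw BGR values, so the red and blue channels would receive each other’s statistics.

```python
MEAN_RGB = [100.5, 101.0, 101.5]
SCALE_RGB = [50.0, 51.0, 52.0]


def normalize(pixel, mean, scale):
    """Per-channel (value - mean) / scale."""
    return [(v - m) / s for v, m, s in zip(pixel, mean, scale)]


bgr_pixel = [10.0, 20.0, 200.0]  # mostly red, stored as B, G, R
rgb_pixel = bgr_pixel[::-1]      # BGR -> RGB: [200.0, 20.0, 10.0]

after_conversion = normalize(rgb_pixel, MEAN_RGB, SCALE_RGB)   # red uses 100.5 / 50
before_conversion = normalize(bgr_pixel, MEAN_RGB, SCALE_RGB)  # blue would use 100.5 / 50
print(after_conversion[0])   # (200 - 100.5) / 50
print(before_conversion[0])  # (10 - 100.5) / 50
```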

Integrate steps into model

We’ve finished declaring the preprocessing steps; now it is time to build them. For debugging purposes, the PrePostProcessor configuration can be printed to the screen:

```cpp
std::cout << "Dump preprocessor: " << ppp << std::endl;
model = ppp.build();
```

```python
print(f'Dump preprocessor: {ppp}')
model = ppp.build()
```

After this, the model will accept U8 input with the {1, 480, 640, 3} shape and BGR channel order. All conversion steps will be integrated into the execution graph. Now you can load the model on the device and pass your image to the model as is, without any data manipulation on the application side.
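What remains on the application side is to hand over a buffer that matches the declared tensor. A small sketch of such a check, assuming the frame arrives as raw interleaved BGR bytes; the name `check_raw_frame` is hypothetical, and the shape simply mirrors the `set_shape({1, 480, 640, 3})` call with the U8 element type declared above.

```python
import math

DECLARED_SHAPE = [1, 480, 640, 3]  # tensor shape declared for the U8 input


def check_raw_frame(frame: bytes, shape=DECLARED_SHAPE) -> None:
    """Raise if a raw interleaved U8 frame cannot be passed to the model unchanged."""
    expected = math.prod(shape)  # one byte per U8 element
    if len(frame) != expected:
        raise ValueError(f"expected {expected} bytes, got {len(frame)}")


check_raw_frame(bytes(480 * 640 * 3))  # 921600 bytes: can be passed as is
```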

See Also

ov::preprocess::PrePostProcessor C++ class documentation