Classification Model Tutorial¶

Accuracy measurement is an important stage of profiling your model in the DL Workbench. Along with performance, accuracy is crucial for assessing model quality.

Model |

Task Type |

Framework |

Source |

Dataset |

|---|---|---|---|---|

Refer to the Get Started with DL Workbench to learn how to import a model and a dataset.

Tip

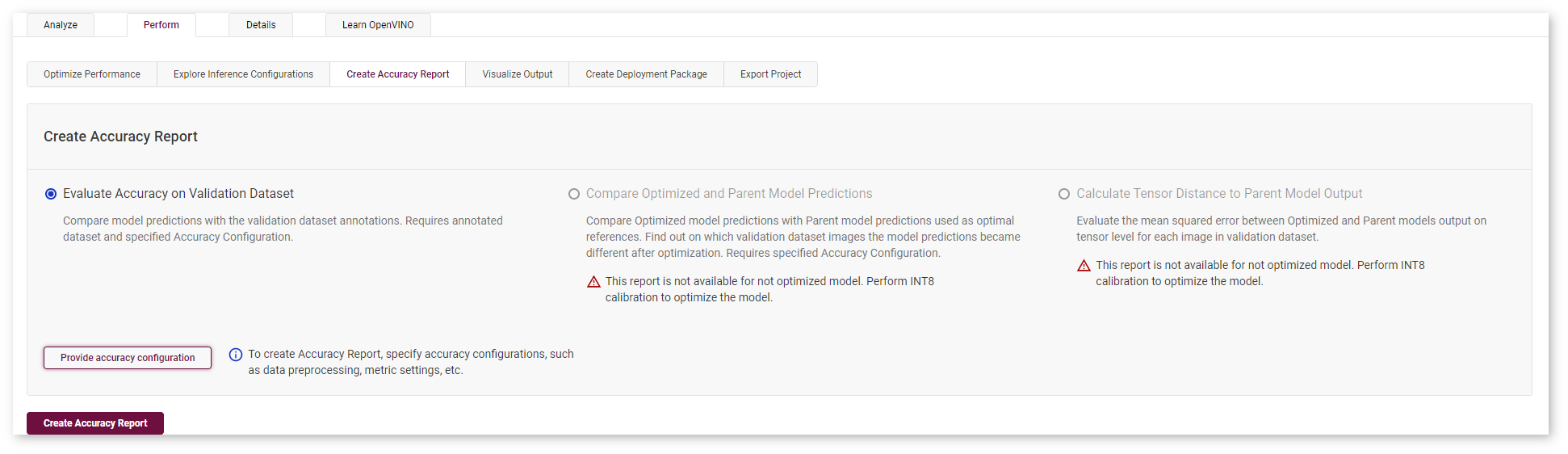

If you have optimized the model using INT8 Calibration, you can use a Not Annotated dataset to compare original and optimized model predictions.

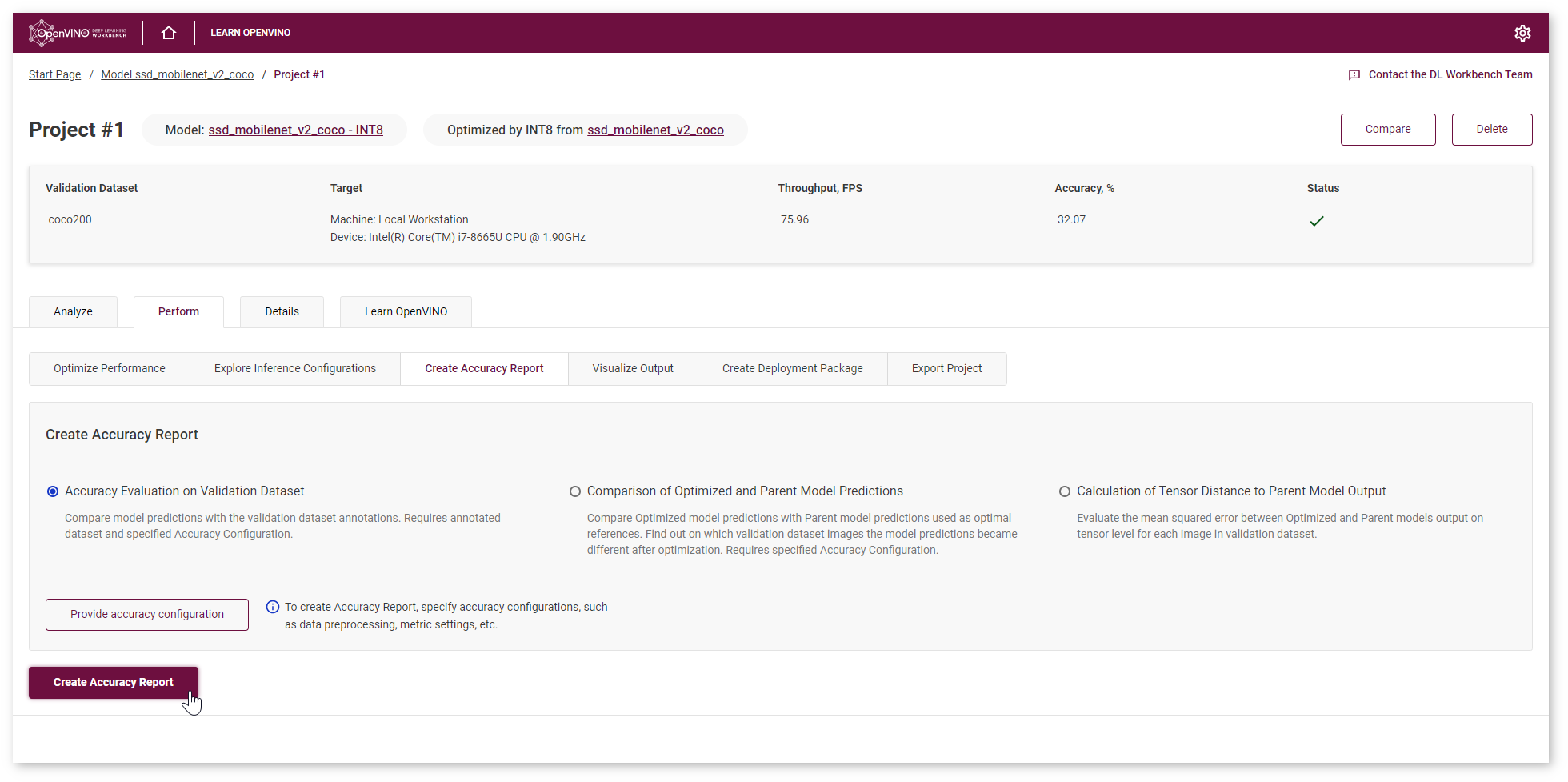

Once you select a model and a dataset and run a baseline inference, the Projects page appears. Go to the Perform tab and select Create Accuracy Report :

Create Accuracy Evaluation on Validation Dataset Report¶

Accuracy Evaluation on Validation Dataset report provides information for evaluating model quality and allows you to compare the model output and validation dataset annotations. Select the report type and click Provide Accuracy Configuration button. Refer to the Accuracy Configurations page to learn about different accuracy settings.

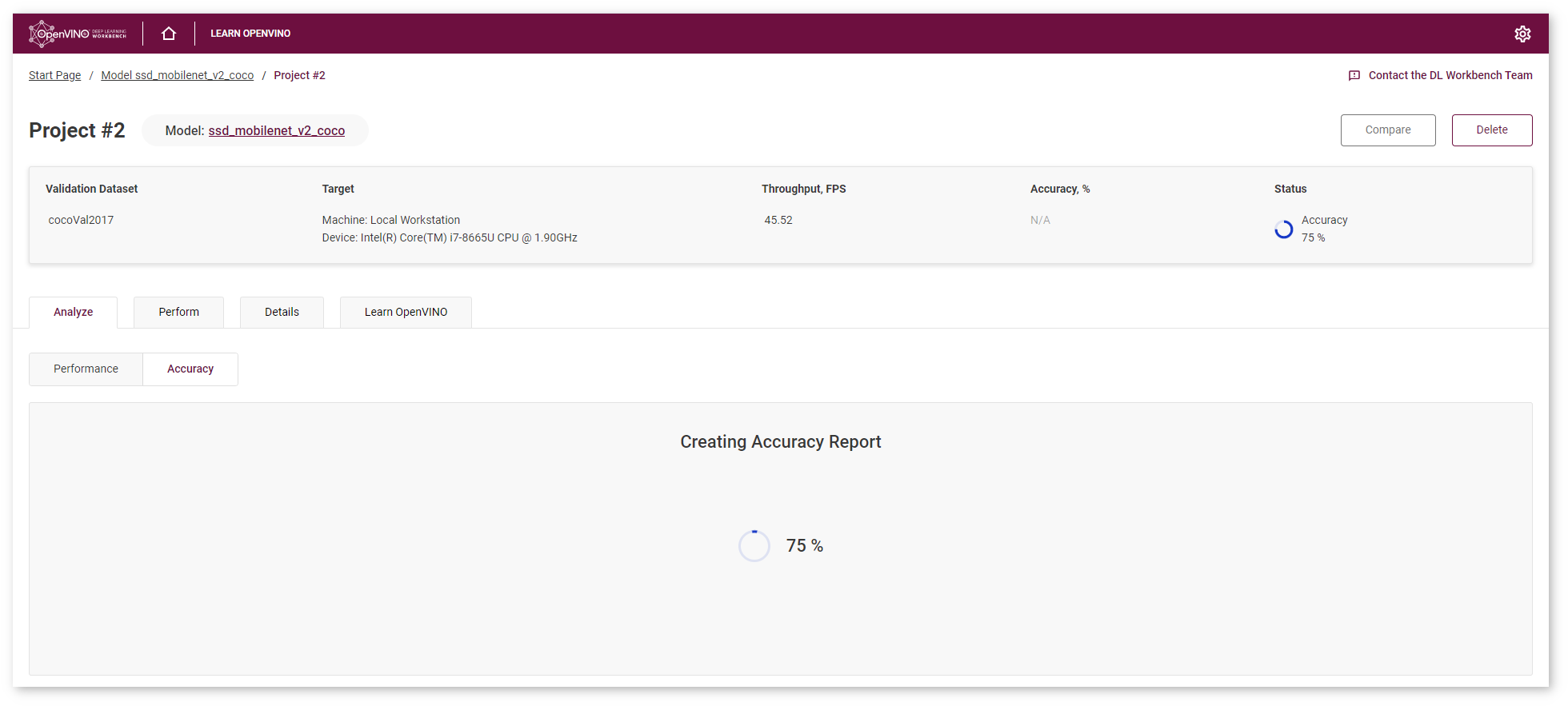

After you click Create Accuracy Report, you will be redirected to the Analyze tab, where you can check the status of Accuracy Report creation:

Note

Accuracy measurements are performed on each dataset image. Creating an Accuracy Report may take some time if the dataset is considerably large.

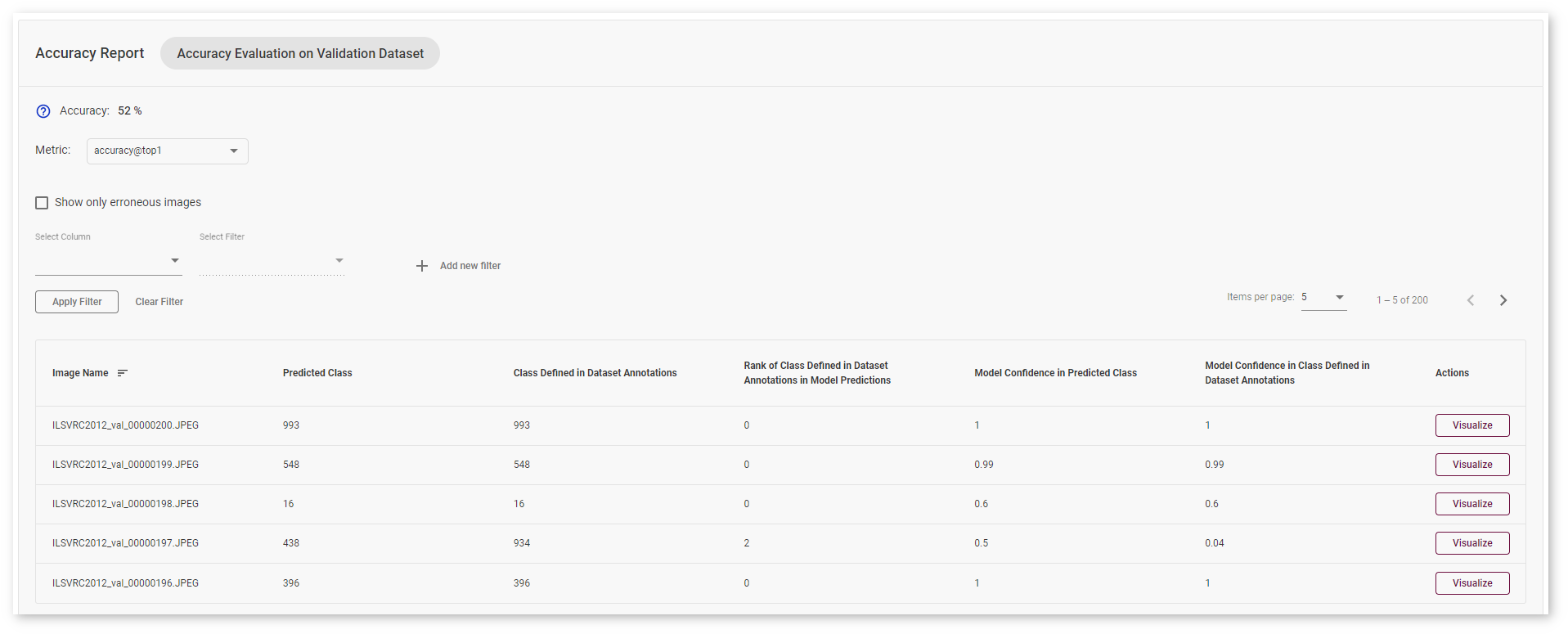

Interpret Report Results¶

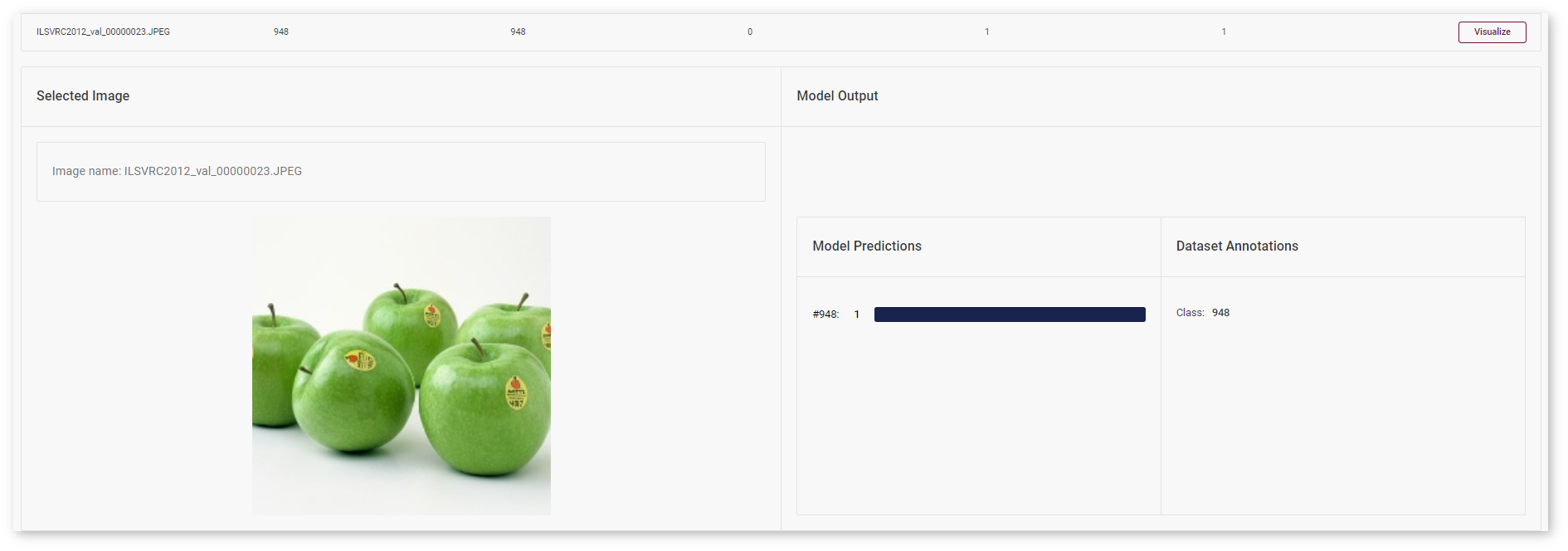

Accuracy is measured on the validation dataset images. The model suggests the Predicted Class of the objects on images. You can compare this class with the Class Defined in Dataset Annotations.

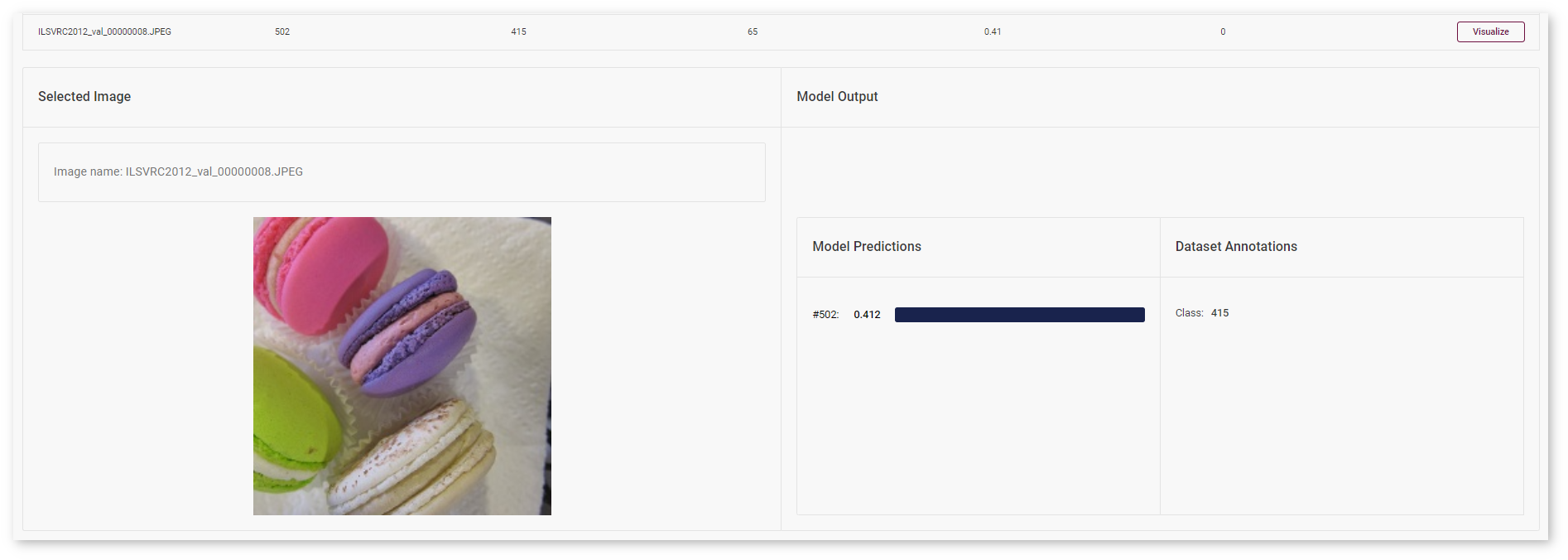

If the classes do not match, the model must be incorrect. To assess the difference between the classes, check the Rank of Class Defined in Dataset Annotations in Model Predictions. You can compare Model Confidence in Predicted Class with Model Confidence in Class Defined in Dataset Annotations.

Click Visualize button under the Actions column to compare the predictions and annotations for a particular image.

In the example image, the squeezenet.1.1 model predicted the same class as specified in dataset annotations (apple) with confidence equaled 1.

Check Show only erroneous images option to display only images where the classes predicted by the model and specified in dataset annotations do not match.

Here the model predicted class 502 (shoes) with a confidence equal to 0.41. The actual class from dataset annotations is 415 (bakery) and has rank 65 in the model predictions as the model confidence for this class equals 0.

Tip

You can enhance your model performance while ensuring that the model accuracy has not decreased dramatically. For that, optimize your model and create an Accuracy Report that allows you to visualize and compare Optimized and Parent model predictions.

All images were taken from ImageNet, Pascal Visual Object Classes, and Common Objects in Context datasets for demonstration purposes only.