Local distribution¶

With local distribution, each C or C++ application or installer ships its own copy of the OpenVINO Runtime binaries. Because OpenVINO has a scalable, plugin-based architecture, some components are loaded at run time only when they are actually needed. It is therefore important to know the minimal set of libraries required to deploy the application, and this guide helps you determine it.

Note

The steps below are operating system independent and refer to library file names without prefixes (like `lib` on Unix systems) or suffixes (like `.dll` on Windows). Do not put `.lib` files into the distribution on Windows, because such files are needed only at the link stage.
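The naming convention from the note above can be sketched as a small helper that turns a library name as written in this guide into an on-disk file name. This is only an illustration: the `Platform` enum and `library_file_name` function are hypothetical names invented here, and the `.so` suffix for Linux is the usual convention rather than something this guide states. Real packages may use different or versioned file names.

```cpp
#include <cassert>
#include <string>

// Hypothetical enum for this sketch: the target platforms it knows about.
enum class Platform { Windows, Linux };

// Turns a library name as used in this guide (for example "openvino") into a
// conventional file name. Assumption: "lib" prefix and ".so" suffix on Linux,
// ".dll" suffix on Windows; version suffixes are not handled.
std::string library_file_name(const std::string& base, Platform platform) {
    assert(!base.empty());
    switch (platform) {
        case Platform::Windows: return base + ".dll";
        case Platform::Linux:   return "lib" + base + ".so";
    }
    return base;  // not reached for the platforms listed above
}
```

For example, `library_file_name("openvino_c", Platform::Windows)` yields `openvino_c.dll`. The Windows import library (`openvino_c.lib`) is deliberately not produced, since it is needed only at the link stage.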

Local distribution is also appropriate for OpenVINO binaries built from source using the Build instructions, but this guide assumes that OpenVINO Runtime is built dynamically. For a static OpenVINO Runtime, select the required OpenVINO capabilities at the CMake configuration stage using CMake Options for Custom Compilation, then build and link the OpenVINO components into the final application.

C++ or C language¶

Regardless of the language the application is written in, `openvino` must always be put into the final distribution, since it is the core library that orchestrates all the inference and frontend plugins. If your application is written in C, you additionally need to put `openvino_c` into the distribution.

The `plugins.xml` file, which contains information about inference devices, must also be included as a support file for `openvino`.

Note

In the Intel Distribution of OpenVINO, `openvino` depends on the TBB libraries, which OpenVINO Runtime uses to optimally saturate the devices with computations, so they must also be put into the distribution package.

Pluggable components¶

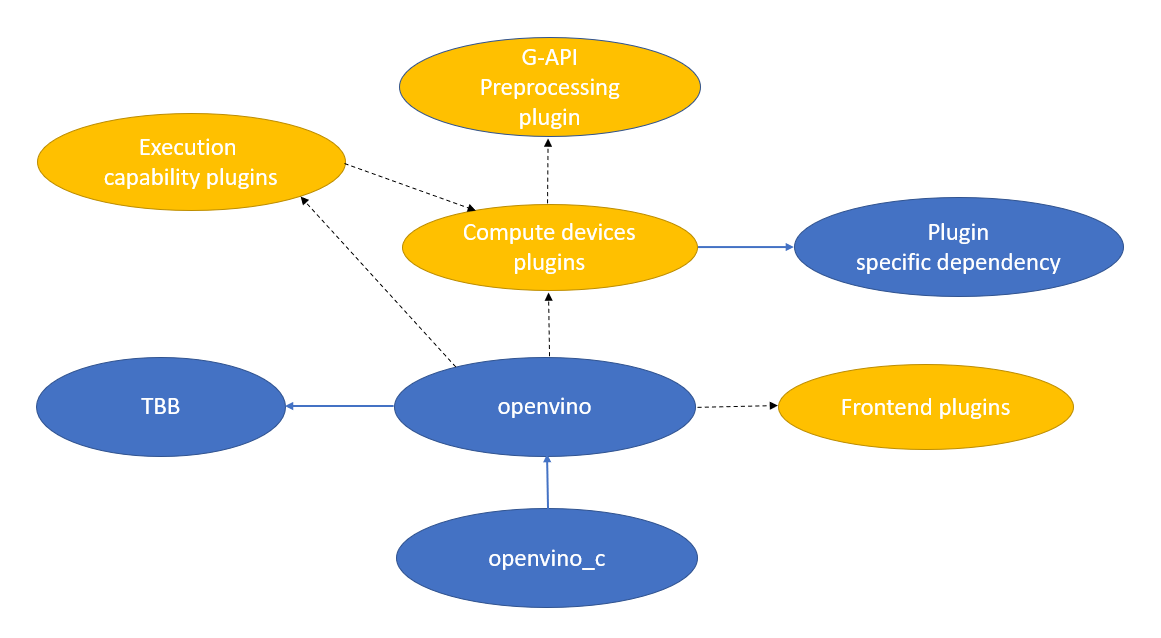

The picture below demonstrates dependnecies between the OpenVINO Runtime core and pluggable libraries:

Compute devices¶

For each inference device, OpenVINO Runtime has its own plugin library:

- `openvino_intel_cpu_plugin` for Intel CPU devices
- `openvino_intel_gpu_plugin` for Intel GPU devices
- `openvino_intel_gna_plugin` for Intel GNA devices
- `openvino_intel_myriad_plugin` for Intel MYRIAD devices
- `openvino_intel_hddl_plugin` for Intel HDDL devices
- `openvino_arm_cpu_plugin` for ARM CPU devices

Depending on which devices are used in the application, put the corresponding libraries into the distribution package.
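The device-to-plugin list above can be expressed as a lookup table. The sketch below is a packaging helper, not part of the OpenVINO API: `device_plugins` and `plugins_for_devices` are hypothetical names, and the `ARM_CPU` key is a label invented here to keep the ARM CPU plugin apart from the Intel one.

```cpp
#include <cassert>
#include <map>
#include <set>
#include <stdexcept>
#include <string>
#include <vector>

// Device-to-plugin table copied from the list above. "ARM_CPU" is a label
// made up for this sketch, not an OpenVINO device name.
const std::map<std::string, std::string>& device_plugins() {
    static const std::map<std::string, std::string> table = {
        {"CPU",     "openvino_intel_cpu_plugin"},
        {"GPU",     "openvino_intel_gpu_plugin"},
        {"GNA",     "openvino_intel_gna_plugin"},
        {"MYRIAD",  "openvino_intel_myriad_plugin"},
        {"HDDL",    "openvino_intel_hddl_plugin"},
        {"ARM_CPU", "openvino_arm_cpu_plugin"},
    };
    return table;
}

// Returns the plugin libraries to ship for the devices the application uses.
// Duplicates collapse because the result is a set; unknown devices throw.
std::set<std::string> plugins_for_devices(const std::vector<std::string>& devices) {
    std::set<std::string> result;
    for (const std::string& device : devices) {
        const auto it = device_plugins().find(device);
        if (it == device_plugins().end()) {
            throw std::invalid_argument("unknown device: " + device);
        }
        result.insert(it->second);
    }
    return result;
}
```

Calling `plugins_for_devices({"GPU", "CPU"})` returns the two Intel plugin names. Any backend libraries or firmware files those plugins depend on still have to be added separately.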

As shown in the picture above, some plugin libraries may have OS-specific dependencies, which are either backend libraries or additional support files with firmware, etc. Refer to the tables below for details:

| Device | Dependency |
|---|---|
| CPU | |
| GPU | |
| MYRIAD | |
| HDDL | |
| GNA | |
| Arm® CPU | |

| Device | Dependency |
|---|---|
| CPU | |
| GPU | |
| MYRIAD | |
| HDDL | |
| GNA | |
| Arm® CPU | |

| Device | Dependency |
|---|---|
| CPU | |
| MYRIAD | |
| Arm® CPU | |

Execution capabilities¶

The HETERO, MULTI, BATCH, and AUTO execution capabilities can also be used explicitly or implicitly by the application. Use the following recommendations to decide whether to put the corresponding libraries into the distribution package:

- If AUTO is used explicitly in the application or `ov::Core::compile_model` is used without specifying a device, put the `openvino_auto_plugin` into the distribution.

  Note

  Auto device selection relies on inference device plugins, so if you are not sure which inference devices are available on the target machine, put all inference plugin libraries into the distribution. If `ov::device::priorities` is used for `AUTO` to specify a limited device list, grab the corresponding device plugins only.

- If MULTI is used explicitly, put the `openvino_auto_plugin` into the distribution.
- If HETERO is either used explicitly or `ov::hint::performance_mode` is used with GPU, put the `openvino_hetero_plugin` into the distribution.
- If BATCH is either used explicitly or `ov::hint::performance_mode` is used with GPU, put the `openvino_auto_batch_plugin` into the distribution.
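The recommendation scheme above amounts to a few boolean rules, sketched below as a packaging helper. `ExecutionUsage` and `execution_plugins` are hypothetical names invented for this illustration. The batch library is spelled `openvino_auto_batch_plugin`, following the Examples section of this guide; treat that spelling as an assumption.

```cpp
#include <cassert>
#include <set>
#include <string>

// Hypothetical description of how an application uses execution capabilities.
struct ExecutionUsage {
    bool auto_explicit = false;            // "AUTO" is passed as the device
    bool compile_without_device = false;   // compile_model called with no device
    bool multi_explicit = false;           // "MULTI" is passed as the device
    bool hetero_explicit = false;          // "HETERO" is passed as the device
    bool batch_explicit = false;           // "BATCH" is passed as the device
    bool performance_hint_on_gpu = false;  // ov::hint::performance_mode with GPU
};

// Applies the rules from the list above and returns the libraries to ship.
// Device plugins themselves are not included here.
std::set<std::string> execution_plugins(const ExecutionUsage& usage) {
    std::set<std::string> libs;
    if (usage.auto_explicit || usage.compile_without_device || usage.multi_explicit) {
        libs.insert("openvino_auto_plugin");  // AUTO and MULTI share one library
    }
    if (usage.hetero_explicit || usage.performance_hint_on_gpu) {
        libs.insert("openvino_hetero_plugin");
    }
    if (usage.batch_explicit || usage.performance_hint_on_gpu) {
        libs.insert("openvino_auto_batch_plugin");  // assumed spelling, see lead-in
    }
    return libs;
}
```

An application that uses none of these capabilities gets an empty set. One that runs MULTI with a performance hint on GPU gets all three libraries.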

Reading models¶

OpenVINO Runtime uses frontend libraries dynamically to read models in different formats:

- To read OpenVINO IR, `openvino_ir_frontend` is used
- To read the ONNX file format, `openvino_onnx_frontend` is used
- To read the Paddle file format, `openvino_paddle_frontend` is used

Depending on which model file formats the application passes to `ov::Core::read_model`, pick the appropriate libraries.
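One way to decide which frontend libraries to ship is to look at the model files bundled with the application. The sketch below maps a file extension to a frontend library name. `frontend_for_model` is a hypothetical helper, and the extensions `.xml`, `.onnx`, and `.pdmodel` are assumed to be the usual ones for OpenVINO IR, ONNX, and Paddle models; this guide does not list them.

```cpp
#include <cassert>
#include <string>

// Returns the frontend library needed to read the given model file, or an
// empty string when the extension is not recognized by this sketch.
// Assumed extensions: ".xml" (OpenVINO IR), ".onnx" (ONNX), ".pdmodel" (Paddle).
std::string frontend_for_model(const std::string& path) {
    const std::string::size_type dot = path.rfind('.');
    const std::string ext = (dot == std::string::npos) ? "" : path.substr(dot);
    if (ext == ".xml")     return "openvino_ir_frontend";
    if (ext == ".onnx")    return "openvino_onnx_frontend";
    if (ext == ".pdmodel") return "openvino_paddle_frontend";
    return "";
}
```

An OpenVINO IR model typically comes with a `.bin` weights file next to the `.xml` file. The helper returns an empty string for it, because no separate frontend is needed for the weights.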

Note

The recommended way to optimize the size of the final distribution package is to convert models to OpenVINO IR using Model Optimizer. In this case, you don’t have to keep ONNX, Paddle, and other frontend libraries in the distribution package.

(Legacy) Preprocessing via G-API¶

Note

G-API preprocessing is a legacy functionality. Use the preprocessing capabilities from OpenVINO 2.0 instead, which do not require any additional libraries.

If the application uses the `InferenceEngine::PreProcessInfo::setColorFormat` or `InferenceEngine::PreProcessInfo::setResizeAlgorithm` methods, OpenVINO Runtime dynamically loads the `openvino_gapi_preproc` plugin to perform preprocessing via G-API.

Examples¶

CPU + IR in C-written application¶

An application written in C performs inference on CPU and reads models stored as OpenVINO IR:

- `openvino_c` library is the main dependency of the application. The application links against this library
- `openvino` is used as a private dependency of `openvino_c` and is also used in the deployment
- `openvino_intel_cpu_plugin` is used for inference
- `openvino_ir_frontend` is used to read the source model

MULTI execution on GPU and MYRIAD in tput mode¶

A C++ application performs inference simultaneously on GPU and MYRIAD devices with the `ov::hint::PerformanceMode::THROUGHPUT` property and reads models stored in the ONNX file format:

- `openvino` library is the main dependency of the application. The application links against this library
- `openvino_intel_gpu_plugin` and `openvino_intel_myriad_plugin` are used for inference
- `openvino_auto_plugin` is used for `MULTI` multi-device execution
- `openvino_auto_batch_plugin` can also be put into the distribution to improve saturation of the Intel GPU device. If this plugin is absent, Automatic batching is turned off
- `openvino_onnx_frontend` is used to read the source model

Auto device selection between HDDL and CPU¶

A C++ application performs inference with automatic device selection, with the device list limited to HDDL and CPU. The model is created using C++ code:

- `openvino` library is the main dependency of the application. The application links against this library
- `openvino_auto_plugin` is used to enable the automatic device selection feature
- `openvino_intel_hddl_plugin` and `openvino_intel_cpu_plugin` are used for inference. `AUTO` selects between the CPU and HDDL devices according to their physical existence on the deployment machine
- No frontend library is needed because `ov::Model` is created in code
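The three examples above can be written down as data and turned into a list of library names to ship. This is a minimal sketch with hypothetical names (`AppProfile`, `manifest`, and the three profile functions). It lists libraries only, so `plugins.xml`, the TBB libraries, and any plugin-specific dependencies still have to be added by hand.

```cpp
#include <cassert>
#include <string>
#include <vector>

// Hypothetical description of one application, mirroring the examples above.
struct AppProfile {
    bool uses_c_api;                     // true when the app is written in C
    std::vector<std::string> plugins;    // device and execution plugins
    std::vector<std::string> frontends;  // frontend libraries, may be empty
};

// Builds the list of library names to ship: the core library first, then the
// C API library if needed, then plugins and frontends in the given order.
std::vector<std::string> manifest(const AppProfile& app) {
    std::vector<std::string> libs;
    libs.push_back("openvino");
    if (app.uses_c_api) libs.push_back("openvino_c");
    libs.insert(libs.end(), app.plugins.begin(), app.plugins.end());
    libs.insert(libs.end(), app.frontends.begin(), app.frontends.end());
    return libs;
}

// CPU + IR in a C-written application.
AppProfile cpu_ir_c_app() {
    return AppProfile{true, {"openvino_intel_cpu_plugin"}, {"openvino_ir_frontend"}};
}

// MULTI execution on GPU and MYRIAD in throughput mode, ONNX model.
AppProfile multi_gpu_myriad_app() {
    return AppProfile{false,
                      {"openvino_intel_gpu_plugin", "openvino_intel_myriad_plugin",
                       "openvino_auto_plugin", "openvino_auto_batch_plugin"},
                      {"openvino_onnx_frontend"}};
}

// Auto device selection between HDDL and CPU, model created in code.
AppProfile auto_hddl_cpu_app() {
    return AppProfile{false,
                      {"openvino_auto_plugin", "openvino_intel_hddl_plugin",
                       "openvino_intel_cpu_plugin"},
                      {}};
}
```

Keeping the profile as plain data makes it easy to review what goes into an installer, and to compare the list against the files actually present in the package directory.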