Import Original Model Recommendations

Use the following recommendations to make the process of importing original models easier:

Check model format

Check whether the DL Workbench supports the model format. Supported formats include TensorFlow*, ONNX*, OpenVINO™ Intermediate Representation (IR), and others.

Convert model to supported format

Optional. Convert the model if its format is not directly supported.

Import model in DL Workbench

Obtain a model in Intermediate Representation (IR) format to start working with the DL Workbench.

1. Check Model Format

The import process depends on the framework of your model. Supported formats include, for example, TensorFlow* (Frozen graph, Checkpoint, Metagraph, SavedModel, Keras H5), ONNX*, and OpenVINO™ IR.
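If you are unsure which of these formats a file is in, its extension is usually a good first hint. The following is a minimal sketch, assuming the common extensions listed in the mapping. The `guess_model_format` helper and `SUFFIX_TO_FORMAT` table are hypothetical and are not part of the DL Workbench. Checkpoints are left out because they span several files.

```python
from pathlib import Path

# Hypothetical mapping from common file extensions to the formats above.
# Illustrative only; this is not how the DL Workbench detects formats.
SUFFIX_TO_FORMAT = {
    ".pb": "TensorFlow frozen graph",
    ".meta": "TensorFlow MetaGraph",
    ".h5": "TensorFlow Keras H5",
    ".onnx": "ONNX",
    ".xml": "OpenVINO IR",  # topology; the weights are in a matching .bin file
}


def guess_model_format(path: str) -> str:
    """Return a best-guess format name for a model file or directory."""
    p = Path(path)
    # A SavedModel is a directory that contains a saved_model.pb file.
    if p.name == "saved_model.pb" or (p / "saved_model.pb").exists():
        return "TensorFlow SavedModel"
    return SUFFIX_TO_FORMAT.get(p.suffix.lower(), "unknown")
```

An `"unknown"` result suggests the model needs to be converted first, as described in Step 2.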

If the model is already in the IR format, no further steps are required. Import the model files and proceed to create a project.

If the model is already in one of the supported formats, skip Step 2. Go to Step 3 and import the model in the DL Workbench to obtain it in IR format.

If the model is not in one of the supported formats, proceed to the next step to convert it to a supported format and then to IR.

2. Convert Model to Supported Format

To convert the model to one of the input formats supported in the DL Workbench, first determine which format to use for your model:

If the model is from the PyTorch framework, convert it to the ONNX format.

If the model is trained in another framework, look for a similar topology supported in the Open Model Zoo (OMZ).

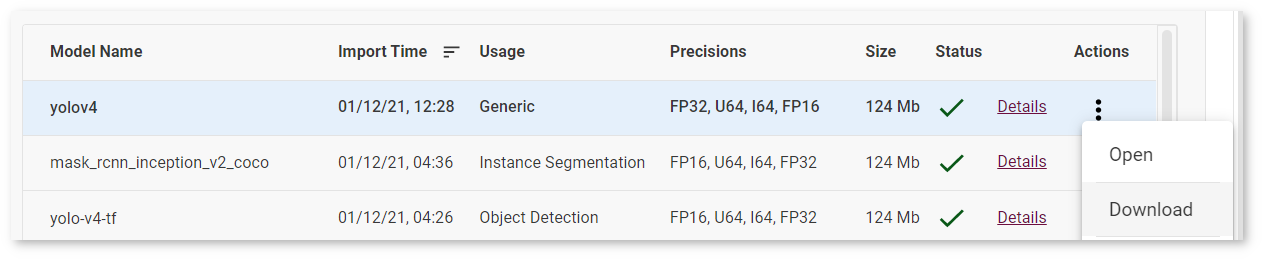

Open the model.yml file of the similar model in the OMZ repository. It contains information on:

which input format should be used for the model of your framework

how to convert the model to this format

how to configure accuracy of the model

In the

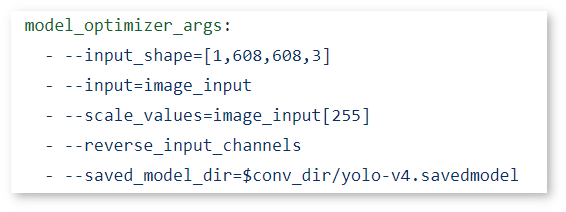

model.ymlfile find the Model Optimizer arguments required to convert the model:

Pay attention to the file extension of the model (for example, .savedmodel, .onnx, .pb, and others). You need to convert your model to the specified format. In the example above, yolov4 needs to be converted to the TensorFlow .savedmodel format.

If there is no similar model for your use case, use either the ONNX or the TensorFlow format. Refer to the Model Optimizer Developer Guide for general information on importing models from different frameworks.
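Outside the DL Workbench, the conversion to IR is done with the Model Optimizer command-line tool described in that guide. The following is a minimal sketch, assuming an OpenVINO release that ships the `mo` command. The `build_mo_command` helper is hypothetical; `--input_model`, `--saved_model_dir`, and `--output_dir` are Model Optimizer options. It shows that, for the two recommended formats, only the flag that points at the model differs.

```python
from pathlib import Path


def build_mo_command(model_path: str, output_dir: str = "ir") -> list[str]:
    """Build a Model Optimizer command for an ONNX file or a SavedModel directory."""
    p = Path(model_path)
    if p.suffix.lower() == ".onnx":
        # ONNX models are passed as a single file.
        source = ["--input_model", str(p)]
    elif p.suffix.lower() == ".savedmodel" or (p / "saved_model.pb").exists():
        # TensorFlow SavedModels are passed as a directory.
        source = ["--saved_model_dir", str(p)]
    else:
        raise ValueError(f"expected an .onnx file or a SavedModel directory: {p}")
    return ["mo", *source, "--output_dir", output_dir]


print(build_mo_command("model.onnx"))
```

To actually run the conversion, you could pass the list to `subprocess.run(cmd, check=True)`. Keeping the command as a list avoids shell-quoting problems with paths that contain spaces.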

3. Import Model in DL Workbench

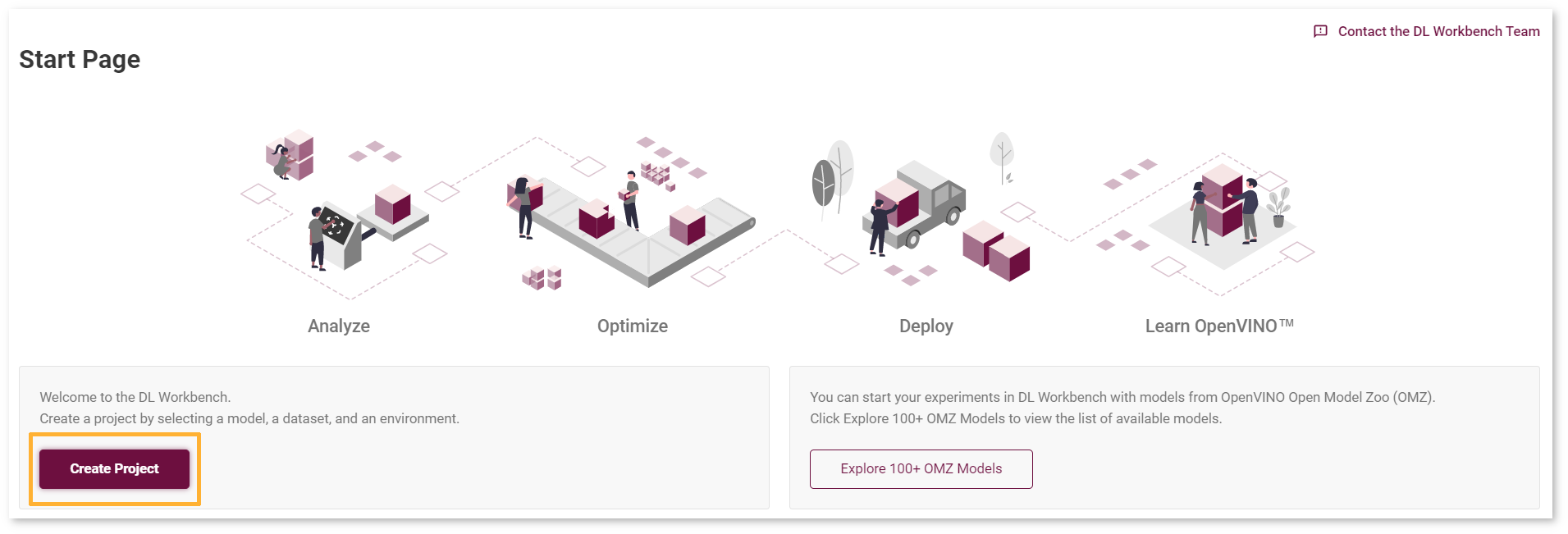

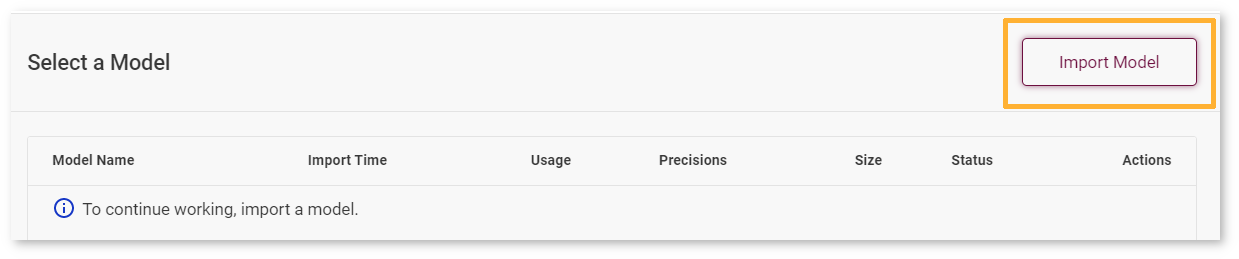

Importing your model in the DL Workbench gives you the model in IR format. To import the model, go to the DL Workbench and click Create Project and Import Model.

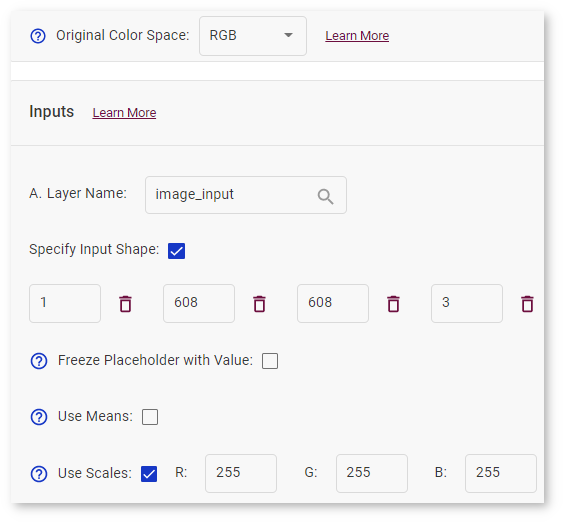

Select the framework and provide the folder with the model in one of the supported formats. Next, fill out the import form in the DL Workbench. The form asks how the model was trained, and the required information depends on the framework. For example, for a Computer Vision model, specify how the input images were fed to the model during training:

color space (BGR, RGB, Grayscale)

applied transformations (mean subtraction, scale factor multiplication)
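To make these settings concrete, the following is a minimal sketch of what they do to a single pixel. It assumes the mean is subtracted first and the result is then multiplied by the scale factor, as the list above describes. The `preprocess_pixel` function and its parameters are hypothetical and only illustrate the arithmetic; they are not a DL Workbench API.

```python
def preprocess_pixel(bgr, mean, scale, to_rgb=False):
    """Apply training-time transformations to one pixel (hypothetical helper).

    bgr    -- (B, G, R) channel values, the order OpenCV loads images in
    mean   -- per-channel values to subtract, in the final channel order
    scale  -- per-channel factors to multiply the mean-subtracted values by
    to_rgb -- reverse the channel order first (BGR -> RGB)
    """
    channels = list(reversed(bgr)) if to_rgb else list(bgr)
    return [(c - m) * s for c, m, s in zip(channels, mean, scale)]


print(preprocess_pixel((10, 20, 30), mean=(10, 10, 10), scale=(0.5, 0.5, 0.5), to_rgb=True))
```

Here the call returns `[10.0, 5.0, 0.0]`. Getting the channel order or the mean values wrong silently shifts every input, which is why the form asks for them. If a tool expects a divisor instead of a multiplier (as Model Optimizer's `--scale_values` does), pass the reciprocal, for example 255 instead of 1/255.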

After importing your model in the DL Workbench, you can download the IR model and work with OpenVINO components, or proceed to accelerate performance, visualize model architecture and outputs, benchmark inference performance, measure accuracy, and explore other DL Workbench capabilities.