Create Accuracy Report

Note

Accuracy Measurements are not available for Natural Language Processing models.

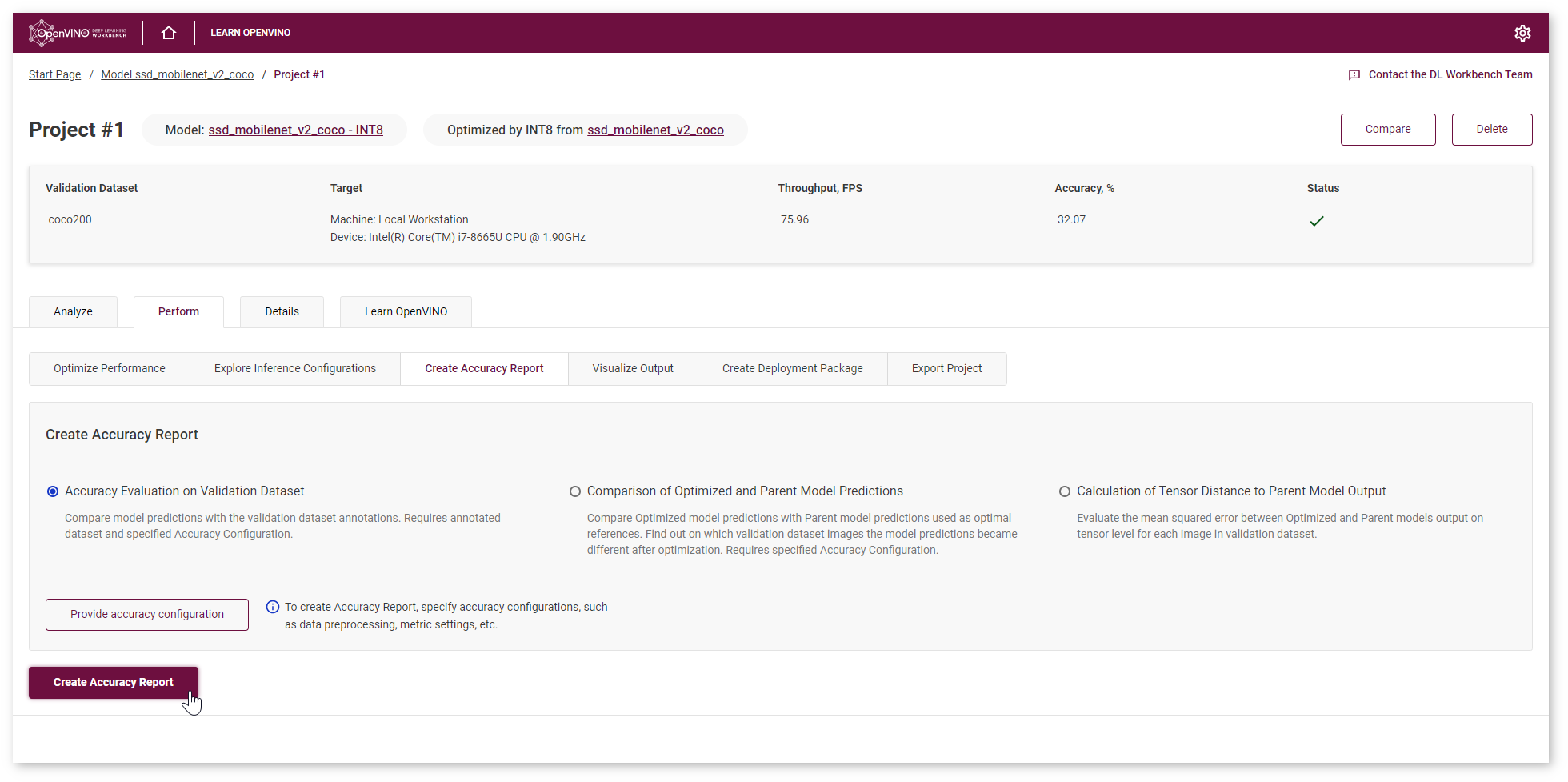

Once you select a model and a dataset and run a baseline inference, the Projects page appears. Go to the Perform tab and select Create Accuracy Report.

Accuracy Report Types

In the DL Workbench, you can create the following reports:

| Requirement | Accuracy Evaluation on Validation Dataset | Comparison of Optimized and Parent Model Predictions | Calculation of Tensor Distance to Parent Model Output |
|---|---|---|---|
| Model | Original or Optimized | Optimized | Optimized |
| Dataset | Annotated | Annotated or Not Annotated | Annotated or Not Annotated |
| Use Case | Classification, Object-Detection, Instance-Segmentation, Semantic-Segmentation, Super-Resolution, Style-Transfer, Image-Inpainting | Classification, Object-Detection, Instance-Segmentation, Semantic-Segmentation | Classification, Object-Detection, Instance-Segmentation, Semantic-Segmentation, Super-Resolution, Style-Transfer, Image-Inpainting |
| Accuracy Configuration | Required | Not Required | Not Required |

Accuracy Evaluation on Validation Dataset

The Accuracy Evaluation on Validation Dataset report provides information for evaluating model quality and lets you compare the model output with the validation dataset annotations. This type of report is explained in detail in the Object Detection and Classification model tutorials.
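To illustrate what comparing model output with annotations means, here is a minimal sketch for the classification case. It is not the DL Workbench implementation: the function name `top1_accuracy` and the sample labels are hypothetical, and the metric the Workbench actually reports depends on the accuracy configuration you provide.

```python
def top1_accuracy(predicted_labels, annotated_labels):
    """Return the fraction of images whose predicted label matches the annotation."""
    if len(predicted_labels) != len(annotated_labels):
        raise ValueError("predictions and annotations must have the same length")
    if not predicted_labels:
        raise ValueError("at least one image is required")
    # Count the images where the prediction agrees with the annotation.
    matches = sum(p == a for p, a in zip(predicted_labels, annotated_labels))
    return matches / len(predicted_labels)


# Hypothetical class IDs for four validation images.
predictions = [3, 1, 7, 7]
annotations = [3, 2, 7, 0]
accuracy = top1_accuracy(predictions, annotations)
print(f"Top-1 accuracy: {accuracy:.2f}")
```

Because this comparison needs ground-truth labels, the report requires an annotated dataset.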

Comparison of Optimized and Parent Model Predictions

To get the other types of Accuracy Report, you first need to [optimize the model](Int-8_Quantization). The Comparison of Optimized and Parent Model Predictions report shows which validation dataset images receive different predictions after optimization. This type of report is explained in detail in the Optimize Object Detection model and Optimize Classification model tutorials.
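The idea behind this comparison can be sketched in a few lines for a classification model. This is an illustration under stated assumptions, not the Workbench's own code: `changed_predictions` and the sample labels are hypothetical.

```python
def changed_predictions(parent_labels, optimized_labels):
    """Return indices of images whose predicted label changed after optimization."""
    if len(parent_labels) != len(optimized_labels):
        raise ValueError("both models must be run on the same set of images")
    return [
        index
        for index, (parent, optimized) in enumerate(zip(parent_labels, optimized_labels))
        if parent != optimized
    ]


# Hypothetical predicted class IDs for four validation images.
parent_labels = [3, 1, 7, 0]
optimized_labels = [3, 1, 5, 0]
changed = changed_predictions(parent_labels, optimized_labels)
print(changed)
```

No annotations are involved: the Parent model's predictions serve as the reference, which is why this report also works with datasets that are not annotated.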

Calculation of Tensor Distance to Parent Model Output

The Tensor Distance Calculation report lets you evaluate the mean squared error (MSE) between the outputs of the Optimized and Parent models at the tensor level for each image in the validation dataset. MSE is the average of the squared differences between actual and estimated values. MSE evaluation enables you to identify significant differences between Parent and Optimized model predictions for a wider set of use cases than classification and object detection alone. This type of report is explained in detail in the Optimize Style Transfer model tutorial.
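As a rough sketch of the calculation, the snippet below computes one MSE value per image. It assumes each output tensor has been flattened to a list of floats; `mean_squared_error` and the sample values are hypothetical and do not reflect how the Workbench stores or processes tensors.

```python
def mean_squared_error(parent_output, optimized_output):
    """Average of squared element-wise differences between two flattened tensors."""
    if len(parent_output) != len(optimized_output):
        raise ValueError("output tensors must have the same number of elements")
    if not parent_output:
        raise ValueError("output tensors must not be empty")
    squared_diffs = ((p - o) ** 2 for p, o in zip(parent_output, optimized_output))
    return sum(squared_diffs) / len(parent_output)


# Hypothetical flattened outputs for two validation images.
parent_outputs = [[0.0, 1.0, 2.0], [0.5, 0.5, 0.5]]
optimized_outputs = [[0.0, 1.0, 4.0], [0.5, 0.5, 0.5]]

# One MSE value per image, as in the per-image rows of the report.
per_image_mse = [
    mean_squared_error(parent, optimized)
    for parent, optimized in zip(parent_outputs, optimized_outputs)
]
print(per_image_mse)
```

An MSE of 0 means the two outputs are identical for that image; larger values point to the images where optimization changed the output most.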